Manufacturing Consciousness: Narrative Automata and the Mediation of Thought Part 2 / 6

From Automata to Autopoiesis

This is Part 2 of a six-part essay exploring how AI systems are reshaping narrative and consciousness through what I call "narrative automata." If you haven't read Part 1, which introduces the theoretical framework and charts the course of the essay, I recommend starting there before continuing.

II. From Automata to Autopoiesis

In Part 1 of this essay, I introduced the concept of "narrative automata": systems driven by interacting AI agents capable of generating emergent stories and behaviors. To understand their significance and the questions they raise, it's crucial to trace their lineage, a path winding through computational theory, Cold War strategy, biology, and philosophy. This history reveals an evolution in how we think about systems and complexity, moving from predictable rule-based worlds to the unpredictable, generative landscapes inhabited by contemporary AI.

The journey arguably begins with the foundational work of John von Neumann in the 1940s and 50s. Working in the nascent era of digital computation, von Neumann explored the theoretical possibility of self-replicating machines through the concept of cellular automata. These are discrete models, often visualized as a grid of cells, where each cell's state (e.g., on/off, alive/dead) changes over time according to a predefined set of simple rules about the states of its immediate neighbors. Think of it as a vast game of cosmic checkers in which the pieces move themselves according to local laws. Von Neumann's highly technical research, published posthumously as Theory of Self-Reproducing Automata, laid the theoretical groundwork by showing how systems governed by simple, local interactions could produce global complexity, replicate themselves, and even perform universal computation.
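To make the idea of "simple rules about immediate neighbors" concrete, here is a minimal sketch in Python. It is an assumption-laden illustration, not von Neumann's construction: his automaton lived on a two-dimensional grid with 29 cell states, whereas this sketch uses the far simpler one-dimensional, two-state "elementary" automata. The function names `step` and `run` and the rule numbering scheme (each rule is an 8-bit lookup table) are choices made for this example.

```python
def step(cells, rule=90):
    """Advance a 1D binary cellular automaton by one generation.

    Each cell looks only at itself and its two immediate neighbours
    (wrapping around at the edges) and reads its next state from the
    8-entry lookup table encoded in the bits of `rule`.
    """
    n = len(cells)
    nxt = []
    for i in range(n):
        left, centre, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (centre << 1) | right  # neighbourhood as a 3-bit number
        nxt.append((rule >> index) & 1)
    return nxt


def run(width=31, generations=15, rule=90):
    """Start from a single live cell and return every generation as a list of rows."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(generations):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    # Rule 90 grows a nested triangle pattern from one live cell.
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Nothing in `step` knows about the overall pattern; each cell consults three values and a lookup table, and the global structure appears only when the rows are printed one after another.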

While the work was deeply mathematical, its implications were profound, suggesting a way to understand emergence, or how complex wholes arise from simple parts, without invoking vital forces or centralized blueprints. These ideas resonated with the parallel development of cybernetics, spearheaded by figures like Norbert Wiener, whose seminal Cybernetics: Or Control and Communication in the Animal and the Machine (1948) explored feedback, control, and information in both machines and living organisms. This framework stemmed directly from Wiener's experiences during the Second World War. The practical challenge of using feedback loops to accurately track aircraft for targeting systems revealed fundamental principles applicable far beyond military hardware. Through his understanding of feedback, Wiener sought to unify the processes governing biological mechanisms with those he helped develop in computation, arguing for a universal science of control and communication.

His work brimmed with the potential to create mathematical frameworks for life and intelligence, but it also carried the weight of knowing that cybernetic research was being weaponized by the military. Wiener foresaw the societal effects of increasing automation and, reflecting his ethical concerns in the post-war era, famously disavowed military-funded research. His exploration of how complex, seemingly purposeful behavior could arise from underlying systemic rules set the stage for further investigations into emergent complexity, an outlook neatly summarized in this quote from his follow-up text, The Human Use of Human Beings:

Society can only be understood through a study of the messages and the communication facilities which belong to it; and that in the future development of these messages and communication facilities, messages between man and machines, between machines and man, and between machine and machine, are destined to play an ever-increasing part.

It was John Conway's Game of Life, however, introduced to a mass audience via Martin Gardner's influential "Mathematical Games" column in the October 1970 issue of Scientific American, that ignited the public imagination. Conway offered a captivating example of a cellular automaton: a zero-player "game" in which an initial pattern of 'living' cells on a grid evolves according to exceedingly simple rules of birth, death, and survival, each determined solely by the number of living neighbors a cell has. Despite the deterministic rules, the results are mesmerizingly complex and often unpredictable: stable structures ("still lifes"), oscillators (such as "blinkers"), moving patterns ("gliders", tiny configurations that "travel" across the grid), and intricate "machines" emerge and interact, echoing some of the principles postulated earlier by Gestalt psychology.
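The rules are short enough to write out in full. The following is a minimal sketch, assuming an unbounded grid represented as a set of live `(x, y)` coordinates; the function name `life_step` and the two example patterns are illustrative choices. It uses the standard rules: a dead cell with exactly three live neighbors is born, a live cell with two or three live neighbors survives, and every other cell dies or stays empty.

```python
from collections import Counter


def life_step(live):
    """Return the next generation of a set of live (x, y) cells."""
    # For every cell adjacent to at least one live cell, count its live neighbours.
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# A "blinker" (oscillator) and a "glider" (moving pattern).
blinker = {(0, 1), (1, 1), (2, 1)}
glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

if __name__ == "__main__":
    print(sorted(life_step(blinker)))  # the horizontal bar flips to a vertical one

    pattern = glider
    for _ in range(4):
        pattern = life_step(pattern)
    # After four generations the glider has the same shape, shifted one cell diagonally.
    print(pattern == {(x + 1, y + 1) for (x, y) in glider})
```

The glider is never "told" to move. Its apparent travel is a side effect of cells being born on one side and dying on the other.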

The Game of Life became a cultural phenomenon in early computing circles, demonstrating vividly that intricate, seemingly purposeful, and 'life-like' behavior could arise spontaneously from simple, local, deterministic interactions. It fueled decades of exploration in artificial life, complexity science, and even generative art. The allure was, and remains, the visible unfolding of complexity from simplicity, a kind of "order for free," to borrow the phrase complexity theorist Stuart Kauffman would later use.

This fascination with rule-based simulation also intersected with the anxieties and strategic demands of the Cold War. Institutions like the RAND Corporation became crucibles for applying computational thinking and game theory to model geopolitical conflict and human decision-making under extreme pressure. Herman Kahn, a prominent and often controversial figure at RAND, exemplified this trend. His work, particularly the extensive analysis in On Thermonuclear War (1960), employed scenario planning and systems analysis to explore the potential outcomes and strategies of nuclear conflict. Kahn’s aim was to make the “unthinkable thinkable,” arguing that rational analysis could be applied even to nuclear catastrophe. This mindset was famously, and chillingly, satirized in the character of Dr. Strangelove in Stanley Kubrick’s 1964 film, a figure partly inspired by Kahn.

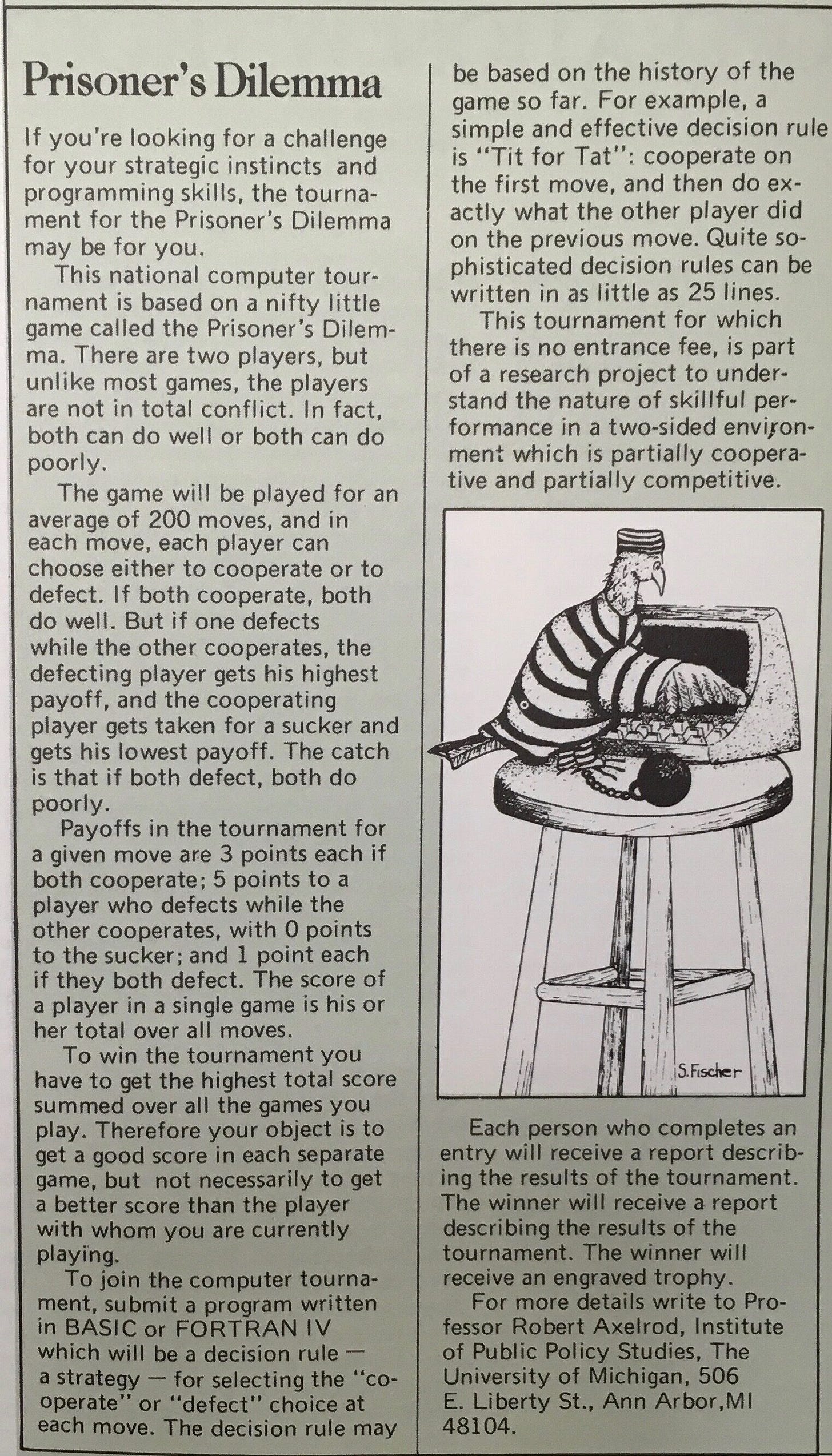

These early simulations, alongside developments in game theory like the Prisoner's Dilemma, marked a critical moment: the extension of computational logic into the realm of human interaction and global strategy. They sought predictability and control, attempting to map complex, messy human realities onto formal systems. This era saw the rise of strategic modeling that influenced actual policy, exploring concepts like deterrence, escalation, and even limited nuclear exchanges. Robert Axelrod's later computer tournaments, run around 1980 on the iterated Prisoner's Dilemma, showed how a simple reciprocal strategy ("Tit for Tat") could foster the emergence of cooperation even in adversarial contexts, offering a counterpoint to purely conflictual models.
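Tit for Tat is simple enough to state in one line: cooperate first, then copy whatever the other player did last. The sketch below is a toy illustration rather than a reconstruction of Axelrod's tournament. The payoff values (3 each for mutual cooperation, 1 each for mutual defection, 5 and 0 when one player defects on a cooperator) are the ones conventionally used for this game, while the function names, the ten-round length, and the `always_defect` opponent are assumptions made for the example.

```python
# Payoffs as (my_points, their_points), indexed by (my_move, their_move).
# "C" = cooperate, "D" = defect.
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def tit_for_tat(own_history, opponent_history):
    """Cooperate on the first round, then mirror the opponent's previous move."""
    return opponent_history[-1] if opponent_history else "C"


def always_defect(own_history, opponent_history):
    """A purely conflictual strategy, used here as a contrast."""
    return "D"


def play(strategy_a, strategy_b, rounds=10):
    """Play an iterated Prisoner's Dilemma and return the two total scores."""
    hist_a, hist_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(hist_a, hist_b)
        move_b = strategy_b(hist_b, hist_a)
        points_a, points_b = PAYOFFS[(move_a, move_b)]
        score_a += points_a
        score_b += points_b
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score_a, score_b


if __name__ == "__main__":
    print(play(tit_for_tat, tit_for_tat))      # (30, 30): sustained cooperation
    print(play(tit_for_tat, always_defect))    # (9, 14): exploited once, then retaliates
    print(play(always_defect, always_defect))  # (10, 10): mutual defection
```

In this toy setup the defector wins its head-to-head match, yet two reciprocators together earn three times what two defectors do, which is the intuition behind cooperation emerging across a population of repeated encounters.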

However, this approach faced significant criticism. Historian of science Paul Edwards, in his crucial study The Closed World: Computers and the Politics of Discourse in Cold War America, argued that these Cold War simulations created a "closed world discourse." By reducing complex geopolitical and human factors to quantifiable parameters within a computational model, they created a self-contained reality that reflected the biases and objectives of their creators (predominantly military strategists), potentially obscuring alternative perspectives and the profound uncertainties and ethical dimensions involved. This critique resonates today as we consider the potential biases embedded within the vast datasets and architectures of LLMs used in modern simulations.

The conceptual journey I am embarking upon in this essay, from these rule-based, often deterministic simulations towards the generative potential of contemporary narrative automata, also involves embracing ideas from biology and non-equilibrium physics. Biologists Humberto Maturana and Francisco Varela introduced the concept of "autopoiesis" (literally "self-creation") in the early 1970s (detailed in Autopoiesis and Cognition: The Realization of the Living). Autopoiesis describes systems, prototypically biological cells, defined by their operational closure: they continuously regenerate the network of processes and components that produce them, thereby maintaining their own distinct organization and boundaries against the environment. Their primary "purpose" is self-maintenance, often tending towards homeostasis or internal stability.

Contemporary LLMs, especially when networked into interacting multi-agent systems like those in my artworks, suggest a technical analogue, which might be termed technopoiesis. While they process information and generate outputs based on their internal structure (the model's weights and architecture), their behavior differs crucially from the homeostatic drive of classical autopoiesis. Narrative automata, fueled by LLMs, seem to thrive on disequilibrium. They don't seek a stable endpoint; their "aliveness", much like in the Game of Life, comes from the continuous generation of novelty, difference, and narrative tension through neighboring chains of interaction.

This resonates strongly with the work of Nobel laureate Ilya Prigogine, who wrote about dissipative structures in Order Out of Chaos: Man's New Dialogue with Nature (1984). These are complex systems (physical, chemical, even social) that maintain their organization far from thermodynamic equilibrium by constantly exchanging energy and matter with their environment, effectively exporting entropy to their surroundings. Think of a flame or a living organism: each requires a constant flow to maintain its complex form. Narrative automata function similarly, sustaining their generative dynamic through the continuous production and processing of narrative "energy". They seek conflict, uncertainty, and discovery, rather than settling into static resolution. They are engines of perpetual difference.

This idea of an underlying generative potential connects, perhaps more metaphorically, to physicist David Bohm's philosophy of the implicate order. In Wholeness and the Implicate Order (1980), he proposed a vision of reality where the tangible world we perceive (the "explicate order") is an unfolding from a deeper, enfolded, and interconnected level of potentiality (the "implicate order"). Narrative automata can be seen as enacting a similar process: they "unfold" specific narratives (explicate order) from the vast, high-dimensional latent space of semantic possibilities encoded within their language models (a kind of implicate order). Each generated interaction isn't just a story element; it subtly reshapes the probability landscape for what comes next, constantly reconfiguring the relationship between the potential and the actual.

This trajectory, from von Neumann's deterministic grids to Conway's emergent complexity, through RAND's strategic simulations and their critiques, to concepts of self-production, non-equilibrium dynamics, and enfolded potential, highlights a fundamental shift. We have moved from systems where complexity emerges from fixed rules to systems where complexity is probabilistically generated from learned patterns within vast possibility spaces. The early cellular automata were predictable in principle; the outputs of LLM-driven narrative automata are stochastic, inherently unpredictable even if statistically patterned, reflecting the probabilistic nature of the underlying models. This mirrors science's broader shift from Newtonian determinism towards quantum indeterminacy and the dynamics of complex, open systems.
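The contrast between a fixed rule and a learned probability distribution can be illustrated with a toy sampler. This is a deliberately reduced sketch and not how any particular language model is implemented: the four candidate words and their scores are invented for the example, and `softmax` and `sample_next` are hypothetical helper names. What it shows is the general mechanism of turning scores into probabilities and then drawing from them, with a "temperature" parameter that controls how adventurous the draw is.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(words, scores, temperature=1.0, rng=random):
    """Draw one word at random, weighted by its softmax probability."""
    probs = softmax(scores, temperature)
    return rng.choices(words, weights=probs, k=1)[0]


# Invented candidates and scores, standing in for a model's view of "what comes next".
WORDS = ["truce", "betrayal", "silence", "confession"]
SCORES = [2.0, 1.5, 0.5, 0.1]

if __name__ == "__main__":
    rng = random.Random()  # unseeded: a different story fragment on each run
    print([sample_next(WORDS, SCORES, temperature=1.0, rng=rng) for _ in range(8)])
    # At a very low temperature the draw becomes almost deterministic.
    print([sample_next(WORDS, SCORES, temperature=0.05, rng=rng) for _ in range(8)])
```

A cellular automaton given the same starting grid always produces the same history. Here the same scores can yield a different sequence on every run, while the statistical pattern (the first word appearing most often) stays stable.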

The stakes of this evolution are immense, moving far beyond theoretical models or geopolitical simulations. These generative systems are now increasingly interwoven with our daily communication, our access to information, and the very processes of thought and cultural production. As we delegate more cognitive and creative tasks to these systems, we engage in what philosopher Bernard Stiegler described as "technogenesis": a technical coevolution in which humans and technologies are no longer separate entities.

The tools we create, from language to large language models, are actively reconfiguring our minds and even entire societies. The historical and theoretical context presented here, from the first flickers of artificial life in cellular automata to the complex, generative, and world-shaping potential of modern AI, is essential to understanding the influence of automation. It further frames the critical questions my artworks, Conflicts, Manufacturing Consciousness, and The Game of Whispers, seek to explore: How do these narrative automata function? What kinds of consciousness do they simulate or participate in? And as they become increasingly integrated into our lives, how are they manufacturing not just stories, but potentially, our understanding of ourselves and the world?

Missed Part 1? Read it here. Ready for Part 3? Catch it here, where I begin to delve into my artworks exploring how these AI systems shape empathy, discourse, and historical understanding, starting with Conflicts, an artwork exploring what happens when the messy, intimate details of past relationships are fed into an algorithmic crucible of large language models (LLMs) and reflected back onto us.