Manufacturing Consciousness: Narrative Automata and the Mediation of Thought Part 1 / 6

The New Landscape of Consciousness

I. Introduction: The New Landscape of Consciousness

In 2023, Stanford researchers released a paper entitled Generative Agents: Interactive Simulacra of Human Behavior, in which they showed how a multi-agent society backed by large language models (LLMs) could exhibit emergent behaviors. This form of storytelling struck me as so profound that I developed an entire course at UCLA around the material: Cultural Automation with Machine Learning. In it, I ask my students, “What happens when AI becomes so increasingly pervasive and inserted into systems of automation that it becomes responsible for the manufacturing of culture?” This question has also led me to explore multi-agent AI environments in my own artworks, where LLMs interact with one another, producing what I call "narrative automata": narrative systems capable of generating emergent behaviors through their interactions.

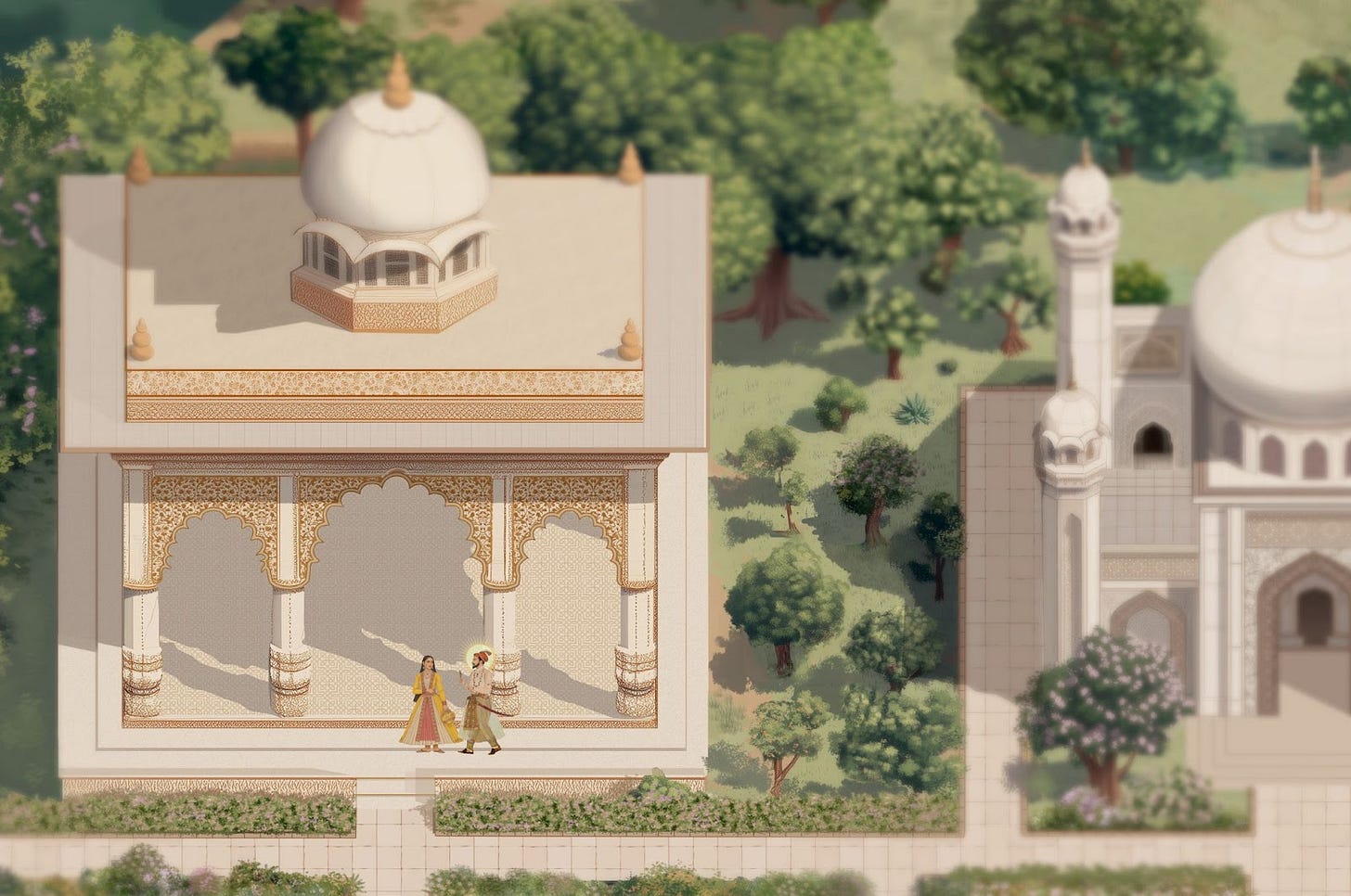

In this six-part essay, I will discuss some of my latest artworks, Conflicts, Manufacturing Consciousness, and The Game of Whispers, which trace my exploration of these narrative automata across different scales and contexts. From simulating personal relationship trauma in Conflicts, to historical empire simulations in The Game of Whispers, to a speculative billboard ad at the reclaimed disaster site of the Three Mile Island nuclear reactor in Manufacturing Consciousness, each of these works directly comments on the AI it utilizes as an active participant in the experience rather than as a passive tool.

This artistic inquiry connects to a broader theoretical concern: As language models and artificial intelligence systems are increasingly integrated into our daily lives, they are also increasingly mediating our thought processes. This mediation recalls Walter Lippmann's concept of the "manufacture of consent," later developed by Noam Chomsky and Edward Herman, which articulated how mass media functions as a system for communicating messages favorable to political and economic elites. Today, we face a far more profound transformation: not merely the manufacturing of consent, but the manufacturing of consciousness itself. What biases might be manufactured in the LLMs that increasingly suggest the words we type and power our keyboards?

These biases are not merely accidental but often deliberately programmed. Recent examples like DeepSeek, a Chinese-developed LLM, demonstrate how these systems can be explicitly programmed to avoid discussing politically sensitive topics like the Tiananmen Square protests. Similarly, different LLMs may show systematic biases toward particular political viewpoints or cultural assumptions depending on their training data and design choices. As these systems become more integrated into our information infrastructure, these biases increasingly shape our cognitive environment without our conscious awareness.

The influence of language models on our cognition may already be evident in subtle linguistic shifts. Anecdotally, I have observed a pattern of increased usage of certain terms in AI-assisted writing. While developing The Game of Whispers, we encountered this phenomenon directly: the AI-backed characters persistently included the word "vigilant" in their dialogues even though we had explicitly prompted them never to say it. We later joked, when looking at our e-mail, about the countless headlines in which the same word appeared. Such linguistic homogenization may be exacerbated by widespread AI adoption, and it represents a subtle but potentially profound reshaping of our expressive capabilities.

It is increasingly clear that AI is changing how we think. As Andy Clark and David Chalmers argue in their extended mind hypothesis, cognitive processes do not stop at the boundaries of skin and skull but extend into our technological environments, as when we externalize our memory into pen and paper or our smartphones. The language models powering contemporary AI represent an unprecedented extension of this principle, as we increasingly offload not just memory but the formulation of thought itself onto these systems. These neural networks are finally living up to their name and have become extra-cortical layers of perception, extending our cognitive architecture into the digital realm. This computational augmentation of human cognition builds upon Douglas Engelbart's pioneering vision from the 1960s, when he established the Augmentation Research Center at Stanford Research Institute, where his team developed the mouse and early collaborative computing systems specifically designed to enhance human intellectual capabilities.

Further, if we believe consciousness itself is an emergent property arising from the complex interactions of neural systems, then these extra-cortical layers may also participate in the emergence of new forms of consciousness that span the biological-digital divide. When combined with Katherine Hayles' concept of technogenesis, the idea that humans and technics coevolve, we can begin to see how these new forms of mediation are changing not just how we think but what constitutes thought and consciousness itself.

This essay examines how narrative automata provide both a method for creating emergent storytelling and a critical lens through which to examine the broader implications of AI-mediated consciousness. As society integrates these technologies into cognitive processes, the question of who controls their biases, implicit and perhaps even explicit, and with them the manufacturing of consciousness, becomes increasingly pressing.

–

Ready for Part 2? Read it here, where I further contextualize the notion of narrative automata and its history before discussing some of my latest artworks, Conflicts, Manufacturing Consciousness, and The Game of Whispers, which explore how AI systems can shape our capacity to empathize, subtly manufacture collective discourse, and even alter our historical understanding. These works expand the concept of narrative automata to examine polarized debate and disinformation dynamics, revealing how language models don't just reflect our thinking but actively participate in manufacturing consciousness at increasingly larger scales.