Manufacturing Consciousness: Narrative Automata and the Mediation of Thought Part 3 / 6

Conflicts: The Personal as Procedural Trauma Simulation

This is Part 3 of a six-part essay exploring how artificial intelligence, particularly through the lens of "narrative automata," is shaping human consciousness. In Part 1, I introduced the core concepts and the overarching theme of the "manufacturing of consciousness." In Part 2, I traced the historical and theoretical lineage from early automata and cybernetics to contemporary ideas like autopoiesis and dissipative structures. If you missed them, you can catch up here: [Introduction: The New Landscape of Consciousness Part 1 / 6] and [From Automata to Autopoiesis Part 2 / 6].

Now, we delve into the first of three artworks that explore these ideas: "Conflicts."

III. Conflicts: The Personal as Procedural Trauma Simulation

Conflicts (2024) is a deeply personal, experimental, and generative dive into the turbulence of human connection, trauma, and memory. It is a multimedia piece born from a question: what happens when the messy, intimate details of past relationships are fed into an algorithmic crucible of large language models (LLMs) and reflected back onto us? Can a simulation of past relationship conflicts illuminate patterns of failed relational dynamics, acting as a mirror of oneself, perhaps even teaching us to empathize with one another's perspectives? Or does it merely create an uncanny, unsettling echo, potentially even deepening the trauma we sought to escape by breaking up with one another?

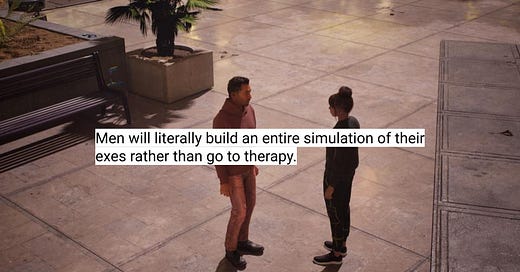

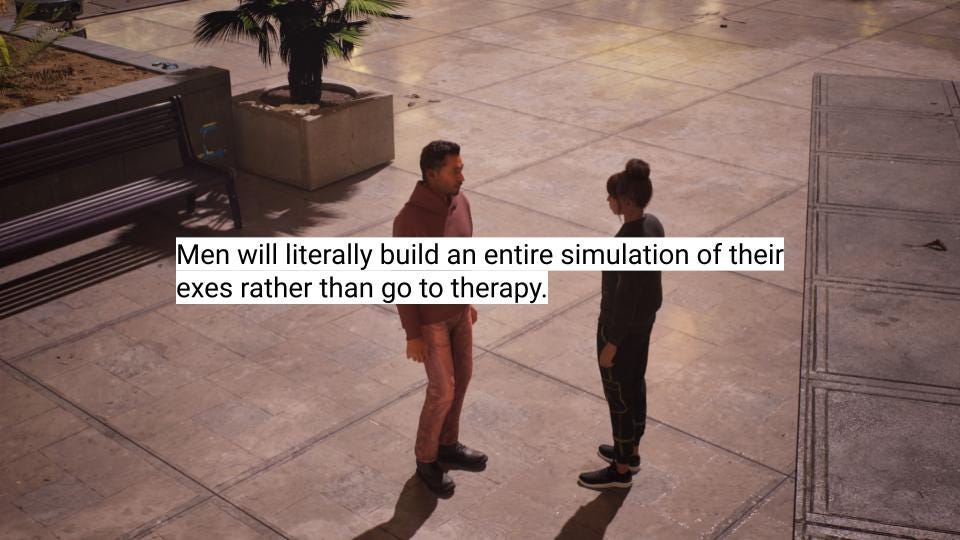

At its core, Conflicts stages an encounter between two narrative automata engaged in an endless debate over past conflicts. The stage is a city scene inspired by downtown Los Angeles, where the two AI-driven non-player characters (NPCs) can interact. But these aren't generic NPCs; they are simulations intended to personify myself and my former romantic partners. Their personalities, conversational styles, and even their perspectives on each other are derived from a so-called "relationship exit survey": a questionnaire first tested on close friends before being sent to the actual ex-partners involved. The form contained a wide range of optional and open-ended questions, ranging from the introductory "describe yourself" to the more pointed and personal "describe the main sources of conflict you had with Parag." There were even questions about what data could be used, such as "would you be willing to use previous direct messages with Parag in the training of the LLM?"

The technical implementation involves a bespoke conversational AI pipeline that enables two LLMs, each embodying a surveyed individual, to converse with one another. Custom metahuman avatars, built from the questionnaire responses, give the LLMs a visually and spatially embodied presence inside a game engine. When the simulation starts, the result is a generative system reminiscent of Conway's Game of Life, but infused with the complexities of my own personal relationships. Against the backdrop of a city simulated with autonomous traffic and crowds, the two characters engage in an endless, unscripted series of reconstituted conflicts. Each simulation run is unique: the conversations emerge probabilistically from the models' latent space, shaped by the survey data and by the unfolding interaction itself. The narrative isn't predetermined; it is an emergent property of the interacting agents, a narrative automaton playing out interpersonal dynamics.
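The essay does not publish the pipeline itself. Below is a minimal Python sketch of the turn-taking structure it describes: two personas built from survey answers, each speaking in turn from its own prompt plus a shared transcript. Everything in the sketch is an assumption for illustration. `Persona`, `run_dialogue`, and `make_stub_generator` are hypothetical names. Survey answers are simply folded into a plain-text prompt. The LLM call is replaced by an offline stub that samples placeholder lines, so no real model API is implied.

```python
import random
from dataclasses import dataclass
from typing import Callable

# A transcript is a list of (speaker name, utterance) pairs shared by both agents.
Turn = tuple[str, str]
# Hypothetical signature for a text generator: (persona prompt, transcript) -> utterance.
GenerateFn = Callable[[str, list[Turn]], str]


@dataclass
class Persona:
    """Hypothetical persona assembled from one participant's survey answers."""
    name: str
    survey: dict[str, str]

    def system_prompt(self, partner: str) -> str:
        # Fold each question/answer pair into a plain-text character description.
        lines = [f"You are {self.name}, in conversation with {partner}."]
        lines += [f"{question} {answer}" for question, answer in self.survey.items()]
        return "\n".join(lines)


def run_dialogue(a: Persona, b: Persona, generate: GenerateFn, turns: int) -> list[Turn]:
    """Alternate speakers; each sees its own persona prompt and the shared transcript."""
    transcript: list[Turn] = []
    pairings = [(a, b), (b, a)]
    for i in range(turns):
        speaker, partner = pairings[i % 2]
        utterance = generate(speaker.system_prompt(partner.name), transcript)
        transcript.append((speaker.name, utterance))
    return transcript


def make_stub_generator(seed: int) -> GenerateFn:
    """Offline stand-in for an LLM: ignores its inputs and samples a placeholder line."""
    rng = random.Random(seed)
    placeholder_lines = [
        "Why do you always bring that up?",
        "I felt unheard.",
        "That's not how I remember it.",
    ]

    def generate(prompt: str, history: list[Turn]) -> str:
        return rng.choice(placeholder_lines)

    return generate


if __name__ == "__main__":
    me = Persona("Parag", {"Describe yourself:": "<survey answer>"})
    ex = Persona("Ex", {})  # an empty survey for the second persona
    for name, line in run_dialogue(me, ex, make_stub_generator(seed=7), turns=4):
        print(f"{name}: {line}")
```

Passing the generator in as a function keeps the dialogue loop independent of any particular model. A real system would swap the stub for an LLM call. Because the model samples its output, each run would then diverge, which is the emergent, unrepeatable quality the essay describes.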

After solving most of the technical challenges (architecting and building the city, building out the technology stack that would drive the two metahumans, and feeding in the form data automatically), I prepared for the moment when I would first see the characters come to life. The first time I ran the simulation, I had only the form data for my own character and an empty form response for the so-called "ex." I was floored by the output, which almost immediately hit the nail on the head: the "ex" avatar asked, "Parag, why did you create this game to understand this relationship? Couldn't we have just gone to therapy like normal people?"

The final output isn't just the simulation itself but a documentary navigating the unsettling, sometimes absurd, and more often than not emotionally draining process of building the work and inviting my exes to join me in the simulation. It includes footage of the sometimes deeply resonant conversations between the AI avatars; panoramic views of the autonomously functioning simulated city; and, crucially, documentary-style footage from the real world as my former romantic partner and I watch the simulation together for the first time. This includes recorded conversations, interviews, and reflections captured in my home and studio, over the phone, and via text message, featuring myself, collaborators, and, with their consent, some of the actual ex-partners whose data informs the simulation.

Conceptually, the work draws direct inspiration from family constellation therapy, Bert Hellinger's psychotherapeutic method. In it, participants physically stand in for family members to externalize trauma and reveal hidden systemic dynamics, allowing unresolved patterns of grief, loyalty, and exclusion to surface and be addressed. In a sense, Conflicts attempts to digitize this process, replacing human stand-ins with AI agents populated by personal data. It proposes a form of "digital therapeutics": using computational modeling not necessarily to heal, but to represent and externalize internal conflict, making its dynamics visible in a new, albeit deeply mediated, form.

However, this externalization is fraught with ethical tension, deliberately engaging with uncomfortable questions about consent and digital agency in the age of surveillance capitalism. Asking ex-partners to complete surveys I designed, while retaining sole control over how their deeply intimate and personal reflections and opinions are transformed into training data for AI models, mirrors the pervasive extraction of human experience that fuels contemporary AI. I aimed to provoke questions about data ownership, the boundaries of the self, and the power dynamics inherent in modeling others. Who gets to create the simulation and model of oneself? Whose version of the past informs the code?

The power relationship is intentionally problematic. In creating these digital doppelgangers, often without the participants fully grasping the emergent, unpredictable nature of the LLMs' behavior, I highlight the asymmetrical power inherent in data collection and algorithmic modeling. The digital consent form I authored mimics the opaque complexity of standard terms-of-service agreements, underscoring how readily we cede digital agency. This isn't just about explicit consent. It reflects a broader reality: our digital actions, down to individual keystrokes, can be modeled by the same kind of autoregressive algorithms that power LLMs. Combined with sensor data, they already feed some of the most powerful predictive models of our behavior, models we ourselves don't even have access to.

The historical precedent of Joseph Weizenbaum's ELIZA (1966) provides a stark contrast and amplifies the concern. Weizenbaum was alarmed by the emotional connections users formed with his simple pattern-matching chatbot. The LLMs in Conflicts are vastly more sophisticated, capable of generating much more convincing illusions of understanding, empathy, and nuanced conversation, which makes the simulation of intimate partners profoundly uncanny and ethically complex. The "ickiness" I acknowledge in the work is critical. It is an affective response designed to make tangible the often invisible, invasive nature of data extraction and AI simulation, especially when applied to the raw material of personal history and trauma.

By deliberately operating in the ethically gray borderlands between consent and exploitation, personal memory and algorithmic abstraction, Conflicts aims to produce what Donna Haraway calls "situated knowledge." It acknowledges its own complicity in the systems it critiques, performing the problematic dynamics of data extraction and simulation rather than offering a sanitized commentary. To invoke Audre Lorde, it is an attempt to use the master's tools (in this case, LLMs) not to dismantle the master's house, but to make its architecture, its biases, and its profound impact on our personal lives unsettlingly visible.

Working on Conflicts has been emotionally taxing for all parties involved. Speaking only for myself, it has required significant breaks (including the current one) and parallel engagement with traditional therapy (yes, for everyone curious, I am in traditional forms of therapy too). Confronting these simulated relationships, while trying to understand the nuances of past traumas through this "machine-made mirror," often left me feeling fragmented and full of doubt. Still, it opened windows and brought resolutions that I am still figuring out how to write about.

One of the earliest revelations was, perhaps unsurprisingly, that despite its sophistication, the simulation starkly exposed the absence of genuine emotion. It revealed how a dialogue driven purely by rhetoric and mutual understanding differs profoundly from the texture of felt experience. As one ex put it, half jokingly and half seriously, as soon as I asked her to be part of the work: "Oh hell yea! I'm going to fucking win, I will get everyone on my side. I'm going to crush you!" I laughed and replied, "But it's not a competition!"

After running a few test simulations with friends who agreed to be part of the work, a deeply intimate exercise I was certainly not prepared for, I realized how right she was: conflict between NPCs is rooted purely in a language devoid of poetics. Even though they may appear embodied, these characters are empty vessels, with no sense of emotion, no non-verbal communication, not even a hint of inspiration. What remains in the generated dialogue is an exacting conversation, without the trauma, excitement, or even the sense of resolution that might color one character's perspective of the other.

However, that does not mean there is nothing to learn from such an exercise. What further emerged from Conflicts is an understanding of narrative not as fixed, but as procedural and generative. The system created by two narrative automata neither seeks the homeostasis of resolution nor falls into the trap of being stuck in one's own trauma, unable to empathize with the other. Instead, it thrives on the disequilibrium of perpetual conflict, constantly unfolding new possibilities from the implicate order of the LLMs' latent space. Each simulated conversation effortlessly reconfigures the probability landscape. It demonstrates how computational logic can structure not just external interactions but can potentially also act as a mirror, opening the doors of perception, for navigating one's own internal emotional landscapes and memories.

In the coming months, I hope to distill more of this into documentary form as I work through the remaining footage and compile a short film about my experience. For now, I will leave you with a quote from another ex, speaking of the work: "I better be the final boss."

In the next part of this essay (Part 4), I dive into "The Game of Whispers," a generative video game that extends the concept of narrative automata to a historical simulation set during the reign of Shah Jahan. This work features twenty AI-powered NPCs who interact, form alliances, spread misinformation, and even plot against one another, creating a complex social ecology that reveals how narrative truth is constructed and corrupted through networks of communication. I'll examine specific emergent behaviors, such as strategic deception and spatial awareness, and explore how these systems model the spread of disinformation at scale, offering insights into how today's AI technologies might already be reshaping our collective understanding of truth and history.

And if you missed the earlier parts of this essay, be sure to catch up here: [Introduction: The New Landscape of Consciousness Part 1 / 6] and [From Automata to Autopoiesis Part 2 / 6].